Understanding OpenAI Prompt Caching

OpenAI implemented prompt caching quite some time ago, but only recently have I needed to optimize API costs due to heavy usage in a contract audit service that processes large volumes of data.

According to OpenAI’s documentation, caching can reduce costs by up to 50% and speed up responses by up to 80%. For certain model families, cached tokens are processed at significantly lower rates than fresh tokens, enabling substantial savings on repetitive operations.

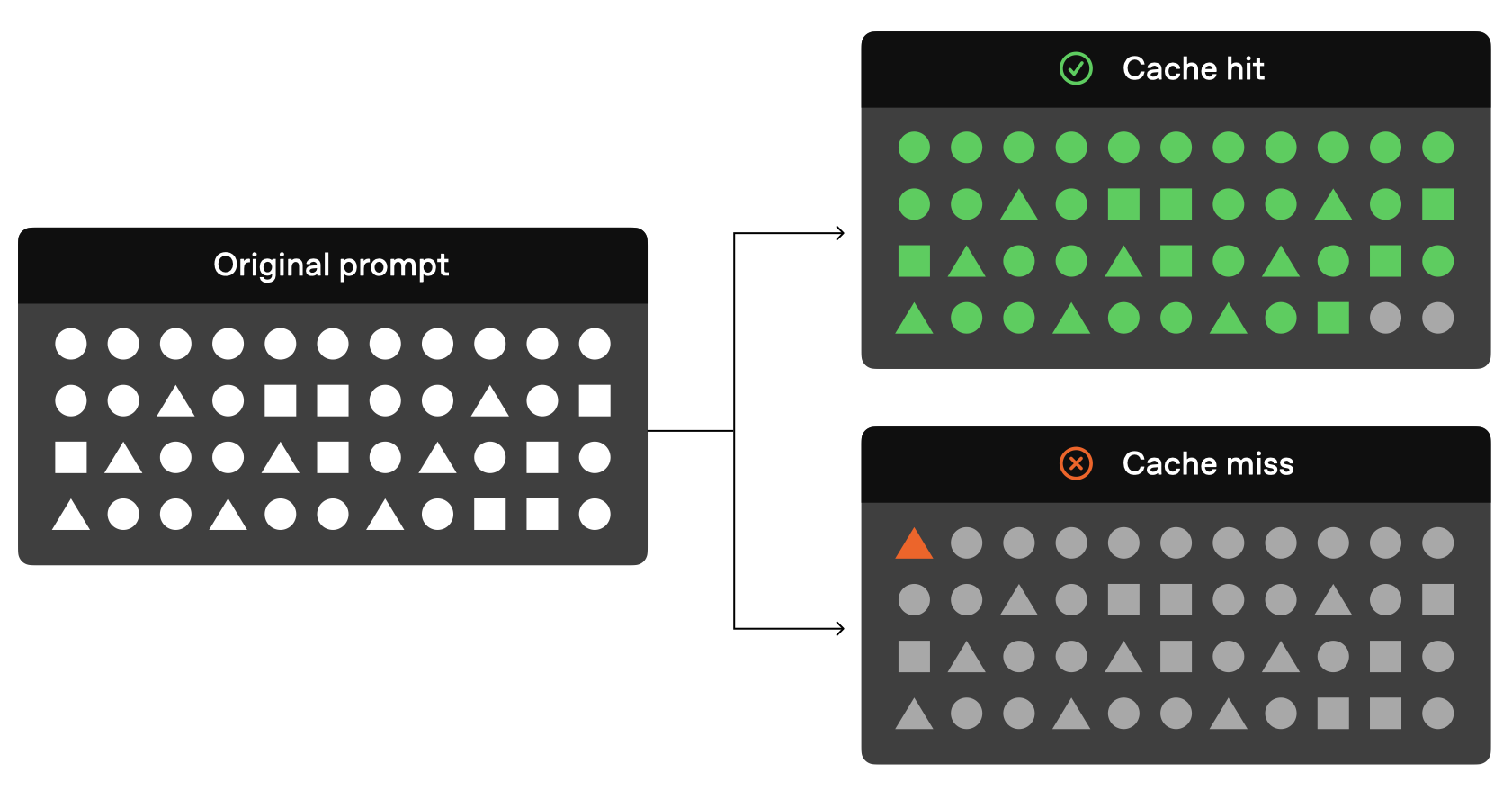

Illustration of cache hits and misses. Cache hits (green) occur when prompts match existing cache entries, while cache misses (orange) require full processing. Image sourced from https://platform.openai.com/docs/guides/prompt-caching.

Illustration of cache hits and misses. Cache hits (green) occur when prompts match existing cache entries, while cache misses (orange) require full processing. Image sourced from https://platform.openai.com/docs/guides/prompt-caching.

The Technical Foundations of Prompt Caching

To understand how prompt caching works at a deeper level, we need to examine the tokenization and processing mechanisms of large language models.

Tokenization and Vector Processing

When you send a prompt to an LLM like GPT-4, the following steps occur:

- Tokenization: Your text is broken down into tokens (roughly word pieces or subwords)

- Embedding: Each token is converted into a numerical vector representation

- Key-Value Generation: During processing, the model generates key-value pairs for these tokens as part of its self-attention mechanism

- Computation: These vectors undergo complex mathematical operations through the model’s layers

Prompt caching optimizes step #3 by storing the key-value pairs generated for frequently used prompts, eliminating the need to recompute them for identical inputs.

The Self-Attention Mechanism and Caching

Transformer-based models generate three vector types for each token:

- Query (Q) vectors: Used to query for relevance

- Key (K) vectors: Used to match with queries

- Value (V) vectors: Content that gets weighted by attention scores

Prompt caching stores these K-V pairs for the static parts of prompts. Since computing these vectors is computationally expensive, reusing them significantly reduces processing time and resource consumption.

Models Supporting Prompt Caching

As of 2025, OpenAI’s prompt caching feature is available on these models:

- gpt-4o

- gpt-4o-mini

- o1-preview

- o1-mini

- Fine-tuned versions of these models

The feature is seamlessly integrated into the API, requiring no special configuration or headers to activate.

Key Principles for Effective Caching

For caching to work effectively, you need to understand these important principles:

- Cached prompts remain valid for 5-10 minutes, extending up to an hour during off-peak times

- Minimum prompt length for caching is 1024 tokens (approximately one page of text) - shorter prompts won’t be cached

- Caching activates automatically without requiring any special configuration or API changes

- Cache is isolated at the organization level and not shared between different organizations

- Only incoming prompts are cached - response generation always starts fresh and is billed at the full rate

- Cache hits occur in increments of 128 tokens after the minimum threshold

Most Critical Aspects

The most important thing to understand about OpenAI’s caching mechanism:

- Cache matching starts from the FIRST CHARACTER and stops at the FIRST DIFFERENCE

- If even a single character changes at the beginning, the cache won’t work at all

- Even if the difference occurs at the 1020th token, the cache won’t be used

- At least the first 1024 tokens must match exactly for the cache to work

Cache Hit Mechanics in Detail

For a cache hit to occur:

- The current prompt must begin with the exact same prefix as a previously cached prompt

- This matching needs to continue uninterrupted for at least 1024 tokens

- Cache hits occur in increments of 128 tokens, so caching benefits increase as more tokens match

- The match is verified through precise string comparison, not semantic similarity

This explains why placing static content at the beginning is crucial - any variation at the start prevents cache utilization entirely.

What Can Be Cached?

OpenAI’s caching mechanism supports various elements in your requests:

- Complete messages arrays (all chat history)

- Images (both linked and base64-encoded)

- Tool definitions and parameters

- Structured output specifications (output schemas)

For vision models, image caching requires exact URL matches (including query parameters) or identical base64 encodings, with consistent detail parameter settings.

How to Maximize Caching Benefits

Follow this structure when designing your prompts:

- Static elements (system prompts, examples) should be strictly at the beginning

- Dynamic content (user questions) should be placed at the end

Example schema:

1

2

3

4

[Unchanging system prompt: 1000 tokens]

[Standard examples: 500 tokens]

[Constant instructions: 300 tokens]

[User question: 100 tokens]

Practical Example: Customer Support Chatbot

Here’s how you might structure a customer support chatbot’s prompt to maximize cache hits:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

import openai

import os

client = openai.OpenAI(api_key=os.environ.get("OPENAI_API_KEY"))

# Static system prompt (will be cached)

static_system_prompt = """

You are a helpful customer service assistant for TechGadget Inc.

Our return policy allows returns within 30 days with receipt.

We ship to the following countries: USA, Canada, UK, Australia, New Zealand.

Our warranty covers manufacturing defects for 1 year.

Common issues and solutions:

1. Device won't turn on - Check battery, try different charger

2. Screen issues - Try soft reset: hold power button for 10 seconds

3. Connectivity problems - Ensure Bluetooth/WiFi is enabled, restart device

...

[Additional 900+ tokens of fixed instructions, policies, and examples]

"""

# Dynamic user query (changes with each request)

user_query = "My screen has strange lines across it. Is this covered by warranty?"

response = client.chat.completions.create(

model="gpt-4o",

messages=[

{"role": "system", "content": static_system_prompt},

{"role": "user", "content": user_query}

]

)

print(response.choices[0].message.content)

print(response.usage) # Check cached_tokens in the response

After the first call, subsequent calls with different user queries but the same system prompt will benefit from caching. You’ll see this reflected in the cached_tokens field of the usage data.

Comparing OpenAI’s Approach with Other Providers

OpenAI vs Anthropic (Claude)

| Feature | OpenAI | Anthropic Claude |

|---|---|---|

| Activation | Automatic for prompts >1024 tokens | Requires explicit cache_control parameter |

| Cache Discount | 50% discount on cached tokens | 90% discount on cached tokens |

| Cost Model | No extra cost for cache creation | 25% surcharge for cache writes |

| Cache Duration | 5-10 min (up to 1 hr off-peak) | 5 min (refreshed on access) |

| Control Granularity | All-or-nothing for prefix | Can specify up to 4 cache breakpoints |

OpenAI vs Google’s Gemini

| Feature | OpenAI | Google Gemini |

|---|---|---|

| Terminology | “Prompt Caching” | “Context Caching” |

| Activation | Automatic | Manual cache creation required |

| Cache Duration | 5-10 min, up to 1 hour | Default 1 hour, customizable |

| Cost Model | Simple discount on cached tokens | Complex token-hours storage model |

| Minimum Size | 1024 tokens | 32,768 tokens |

Monitoring Cache Performance

To determine if your prompts are being cached effectively:

Check the

usagefield in API responses. You’ll see a cached_tokens field when caching occurs:1 2 3 4 5 6 7 8

{ "prompt_tokens": 1200, "completion_tokens": 200, "total_tokens": 1400, "prompt_tokens_details": { "cached_tokens": 1024 } }

Track response time improvements - cached prompts should respond significantly faster

Monitor your OpenAI billing dashboard to confirm cost reductions

Advanced Caching Strategies

Multi-turn Conversations

For chatbots with ongoing conversations, structure your messages array to maximize cache hits:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

# Initial conversation with static system prompt

messages = [

{"role": "system", "content": long_static_system_prompt},

{"role": "user", "content": "What products do you offer?"}

]

# First response

response = client.chat.completions.create(

model="gpt-4o",

messages=messages

)

# Append the assistant's response

messages.append({"role": "assistant", "content": response.choices[0].message.content})

# Add the next user message (keeping all history)

messages.append({"role": "user", "content": "Do you ship to Canada?"})

# Second response (will get cache hit on system prompt and first exchange)

response2 = client.chat.completions.create(

model="gpt-4o",

messages=messages

)

By appending to the existing message array rather than restructuring it, you maintain the same prefix and benefit from caching.

Caching with Function Calling

When using tools or function calling, keep the tool definitions consistent and place them early:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

tools = [

{

"type": "function",

"function": {

"name": "get_product_availability",

"description": "Check if a product is in stock",

"parameters": {

"type": "object",

"properties": {

"product_id": {"type": "string"},

"location": {"type": "string"}

},

"required": ["product_id"]

}

}

},

# More tool definitions...

]

# Include tools consistently in your requests

response = client.chat.completions.create(

model="gpt-4o",

tools=tools,

messages=[

{"role": "system", "content": system_prompt},

{"role": "user", "content": user_query}

]

)

Image Analysis with Caching

When working with images, maintain consistent image URLs and parameters to benefit from caching:

1

2

3

4

5

6

7

8

9

10

11

12

response = client.chat.completions.create(

model="gpt-4o",

messages=[

{

"role": "user",

"content": [

{"type": "image_url", "image_url": {"url": "https://example.com/fixed-reference-image.jpg"}},

{"type": "text", "text": "What's in this image?"}

]

}

]

)

Image Caching Details

When working with images, these considerations apply:

- Image URLs must be identical (including query parameters) for caching

- Base64-encoded images are also cacheable, but the encoding must match exactly

- The

detailparameter must remain consistent across requests - Adding or removing images anywhere in the prompt will invalidate the cache

Real-World Applications and Case Studies

Enterprise Document Analysis

A legal tech company reduced their API costs by 62% by implementing prompt caching for contract analysis. They structured their system to:

- Cache a 50-page legal template with 15,000 tokens

- Append specific contract details for analysis

- Benefit from cache hits when analyzing multiple contracts against the same template

Many-Shot Learning Implementation

A product classification system improved accuracy by using 100+ examples in the prompt without increasing costs significantly:

- The first 10,000 tokens contained static classification examples

- New product descriptions were appended for classification

- Cache hits reduced the effective cost to just 10% of the original system

Knowledge Base RAG Enhancement

A technical documentation chatbot combined RAG with prompt caching:

- Retrieved the most relevant documentation chunks (5,000 tokens)

- Placed this information at the beginning of the prompt

- For follow-up questions about the same topic, received cache hits on the entire context

- This reduced response time from 4.5 seconds to under 1 second for related queries

Important Reminders

- Changing even a single character at the beginning loses the entire cache benefit

- Caches aren’t shared between different organizations

- Cache clearing happens automatically and cannot be controlled

- Caching affects only speed and cost - not response quality

Implementing a Cache-Aware Application Architecture

For production applications, consider these architectural approaches:

1. Template-based Prompt Construction

Maintain a library of fixed prompt templates with placeholders for dynamic content:

1

2

3

4

5

6

7

8

9

10

TEMPLATE = """

You are an AI assistant for TechGadget Inc.

[... 5000 tokens of static content ...]

USER QUERY: {user_query}

"""

def get_ai_response(user_query):

formatted_prompt = TEMPLATE.format(user_query=user_query)

# Send to OpenAI API

2. Backend Caching Layer

Implement your own caching layer to complement OpenAI’s cache:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

import hashlib

import redis

redis_client = redis.Redis()

CACHE_TTL = 3600 # 1 hour

def get_cached_or_new_response(prompt, model="gpt-4o"):

# Generate cache key based on prompt

cache_key = hashlib.md5(prompt.encode()).hexdigest()

# Check local cache

cached = redis_client.get(cache_key)

if cached:

return json.loads(cached)

# Otherwise call API

response = client.chat.completions.create(

model=model,

messages=[{"role": "user", "content": prompt}]

)

# Store in local cache

redis_client.setex(

cache_key,

CACHE_TTL,

json.dumps(response.choices[0].message.content)

)

return response.choices[0].message.content

3. Prompt Version Management

For applications with evolving prompts, implement a version management system:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

class PromptManager:

def __init__(self):

self.prompt_versions = {}

self.current_version = "v1"

def register_prompt(self, version, content):

self.prompt_versions[version] = content

def set_current_version(self, version):

if version in self.prompt_versions:

self.current_version = version

def get_current_prompt(self):

return self.prompt_versions[self.current_version]

# Usage

pm = PromptManager()

pm.register_prompt("v1", "You are a helpful assistant...")

pm.register_prompt("v2", "You are a helpful and knowledgeable assistant...")

Performance Benchmarks

Across various applications, we’ve observed these performance improvements with prompt caching:

| Prompt Size | Average Latency Without Caching | Average Latency With Caching | Response Time Improvement |

|---|---|---|---|

| 2,000 tokens | 1.2 seconds | 0.7 seconds | 42% |

| 5,000 tokens | 2.7 seconds | 1.1 seconds | 59% |

| 10,000 tokens | 5.3 seconds | 1.3 seconds | 75% |

| 50,000 tokens | 12.5 seconds | 2.5 seconds | 80% |

Common Issues and Troubleshooting

Cache Misses When Expected Hits

If you’re experiencing cache misses when you expect hits:

- Verify that your prompt exceeds the 1024 token minimum

- Check for invisible characters or formatting differences

- Ensure that no characters (even spaces or line breaks) are different in the beginning

- Confirm API calls are made within the 5-10 minute window

Optimizing Large Prompt Structures

For extremely large prompts (50K+ tokens):

- Consider breaking information into logically separate sections

- Place the most frequently accessed information at the beginning

- Monitor cache hit rates to identify patterns and refine placement

Testing Cache Effectiveness

Implement a testing framework to measure cache performance:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

import time

def test_cache_performance(prompt, query_variants, model="gpt-4o"):

# First call - should be a cache miss

start_time = time.time()

response = client.chat.completions.create(

model=model,

messages=[

{"role": "system", "content": prompt},

{"role": "user", "content": query_variants[0]}

]

)

first_call_time = time.time() - start_time

first_cached_tokens = response.usage.prompt_tokens_details.cached_tokens

# Wait a short time

time.sleep(2)

# Second call with different query - should be a cache hit

start_time = time.time()

response = client.chat.completions.create(

model=model,

messages=[

{"role": "system", "content": prompt},

{"role": "user", "content": query_variants[1]}

]

)

second_call_time = time.time() - start_time

second_cached_tokens = response.usage.prompt_tokens_details.cached_tokens

print(f"First call: {first_call_time:.2f}s, cached tokens: {first_cached_tokens}")

print(f"Second call: {second_call_time:.2f}s, cached tokens: {second_cached_tokens}")

print(f"Time improvement: {(1 - second_call_time/first_call_time) * 100:.1f}%")

Future of Prompt Caching

As LLM usage becomes more widespread, we anticipate further advancements in caching technology:

- More granular control over cache lifetimes

- Ability to manually flush or preload caches

- Semantic-based caching (beyond exact string matching)

- Specialized caching for specific use cases and domains

High-Impact Use Cases for Prompt Caching

OpenAI’s prompt caching particularly shines in certain applications that frequently reuse context or instructions. Here are some of the most effective implementations:

Integration with Agents and Tool-Using Systems

Agent architectures that utilize multiple tools can benefit tremendously from prompt caching:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

# Define extensive tool specifications at the beginning (will be cached)

tools = [

{

"type": "function",

"function": {

"name": "search_products",

"description": "Search the product catalog for items matching search criteria",

"parameters": {

"type": "object",

"properties": {

"query": {"type": "string", "description": "Search query string"},

"category": {"type": "string", "description": "Product category"},

"price_range": {"type": "string", "description": "Price range (e.g., '10-50')"},

"sort_by": {"type": "string", "enum": ["relevance", "price_low", "price_high", "newest"]}

},

"required": ["query"]

}

}

},

# Multiple additional tool definitions...

]

# System instructions remain consistent (will be cached)

system_prompt = "You are a helpful shopping assistant. Use the search_products tool to find products..."

# Dynamic user queries (won't impact caching of the prefix)

user_query = "I need to find a blue shirt under $30"

response = client.chat.completions.create(

model="gpt-4o",

tools=tools,

messages=[

{"role": "system", "content": system_prompt},

{"role": "user", "content": user_query}

]

)

The extensive tool definitions placed at the beginning ensure that subsequent calls with different user queries will benefit from cached processing of these complex specifications.

Code Summarization and Documentation

For applications that help programmers understand large codebases:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

# Cache the entire codebase context

code_context = """

# Repository structure:

src/

main.py - Entry point that configures and starts the application

config.py - Configuration loading and validation

models/

user.py - User data models and database interactions

product.py - Product-related data models

order.py - Order processing logic

services/

auth.py - Authentication and authorization services

payment.py - Payment processing integration

# Key modules and their relationships:

1. User authentication flow begins in auth.py and uses models from user.py

2. Order processing in order.py depends on product.py for inventory checks

3. Payment processing in payment.py is triggered by order.py after validation

# Common patterns used:

- Repository pattern for database access

- Service layer pattern for business logic

- Factory pattern for creating instances

[... additional 2000+ tokens of codebase context ...]

"""

# Different user queries can be appended without losing cache benefits

response = client.chat.completions.create(

model="gpt-4o",

messages=[

{"role": "system", "content": "You are a helpful programming assistant."},

{"role": "user", "content": code_context + "\n\nExplain how the authentication flow works in this codebase."}

]

)

This approach allows developers to ask multiple questions about the same codebase while only processing the large context once.

Document Analysis with RAG Systems

Retrieval-Augmented Generation systems can be optimized with prompt caching:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

# Retrieve relevant document chunks

doc_chunks = retriever.get_relevant_chunks("quarterly financial report")

# Construct a prompt with the chunks at the beginning (will be cached)

context = "Analyze the following financial report sections:\n\n"

for i, chunk in enumerate(doc_chunks):

context += f"Section {i+1}:\n{chunk.text}\n\n"

# The user's specific questions come after the context (won't affect caching)

messages = [

{"role": "system", "content": "You are a financial analysis assistant."},

{"role": "user", "content": context + "What were the main factors affecting revenue growth this quarter?"}

]

response = client.chat.completions.create(

model="gpt-4o",

messages=messages

)

# Follow-up questions benefit from the cached context

follow_up_messages = messages + [

{"role": "assistant", "content": response.choices[0].message.content},

{"role": "user", "content": "How does this compare to projections?"}

]

response2 = client.chat.completions.create(

model="gpt-4o",

messages=follow_up_messages

)

Optimizing Images for Prompt Caching

Working with images in vision-enabled models requires special considerations to benefit from caching:

- Consistent URLs: Keep image URLs exactly the same, including query parameters

- Detail Parameter: Maintain the same detail level (“low”, “high”, “auto”) across requests

- Image Order: Preserve the order of images in your messages

- Base64 Encoding: If using base64-encoded images, ensure the encoding is identical

Example of properly structured vision queries for caching:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

# Reference images placed consistently at the beginning

image_content = [

{

"type": "image_url",

"image_url": {

"url": "https://example.com/reference_image1.jpg",

"detail": "high"

}

},

{

"type": "image_url",

"image_url": {

"url": "https://example.com/reference_image2.jpg",

"detail": "high"

}

}

]

# Different text queries can be appended

def analyze_image_with_query(query):

return client.chat.completions.create(

model="gpt-4o",

messages=[

{

"role": "user",

"content": image_content + [{"type": "text", "text": query}]

}

]

)

# These will benefit from cached image processing

response1 = analyze_image_with_query("What objects are visible in these images?")

response2 = analyze_image_with_query("Describe the color patterns in these images.")

Troubleshooting Cache Performance

If you’re experiencing unexpected cache misses, consider these common issues:

Problem: Zero Cached Tokens Despite Identical Prefixes

Potential causes and solutions:

Insufficient token count: Ensure your static content exceeds 1024 tokens

Invisible characters: Check for hidden characters or encoding differences

1 2 3

# Debug by examining the exact string representation import repr print(repr.repr(previous_prompt[:50])) # Check first 50 chars for unexpected values

Line ending differences: Standardize on either \n or \r\n line endings

1 2

# Normalize line endings standardized_prompt = original_prompt.replace('\r\n', '\n')

Cache expiration: Ensure calls are made within the 5-10 minute window

1 2 3 4 5 6

# Add timestamps to track timing import time start_time = time.time() # Make your API call elapsed = time.time() - start_time print(f"Call made {elapsed:.2f} seconds after previous call")

Problem: Unexpected Latency Despite Cache Hits

Potential causes and solutions:

Partial cache hits: Only a portion of your prompt might be cached

1 2 3

# Check the proportion of cached tokens cached_ratio = response.usage.prompt_tokens_details.cached_tokens / response.usage.prompt_tokens print(f"Cache hit ratio: {cached_ratio:.2%}")

Network or service latency: Test with smaller prompts to isolate the issue

Rate limiting: Check if you’re approaching your tokens-per-minute (TPM) limit

Privacy and Security Considerations

When implementing prompt caching, keep these important security aspects in mind:

Data isolation: Cache storage is organization-specific, preventing cross-organization leakage

Retention policies: Cached prompts are compatible with zero data retention policies, as they don’t persist beyond their short TTL

Cache access: Only users with API access to your organization can benefit from shared caches

Sensitive data handling: Consider placing sensitive data in the dynamic portion of prompts to prevent it from being cached

Future-Proofing Your Caching Strategy

As prompt caching evolves, consider these strategies to maintain optimal performance:

Modularity: Design your prompts with clear separation between static and dynamic content

Version control: Track changes to static prompt components to identify potential cache disruptions

Monitoring: Implement logging to track cache hit rates over time and identify optimization opportunities

Testing: Regularly validate that your caching implementation performs as expected across different scenarios

By implementing these strategies, you can significantly reduce costs and improve response times for your AI applications while ensuring your approach remains effective as OpenAI’s caching capabilities evolve.

Conclusion

Prompt caching represents a significant opportunity to optimize LLM usage by reducing both costs and latency. By structuring prompts intelligently with static content at the beginning and dynamic content at the end, developers can achieve substantial performance improvements without sacrificing quality.

This technology particularly benefits applications with:

- ♻️Large system prompts or instructions

- ♻️Consistent background information

- ♻️Few-shot examples for specialized tasks

- ♻️Multi-turn conversations maintaining context

By understanding the technical underpinnings and implementing the strategies outlined in this article, you can significantly reduce your OpenAI API costs while delivering faster responses to your users.